Results

As stated in the introduction, the neural network was trained and evaluated in four areas: obstacle avoidance, road following, obeying traffic lights, and path finding. Each is discussed in turn below.

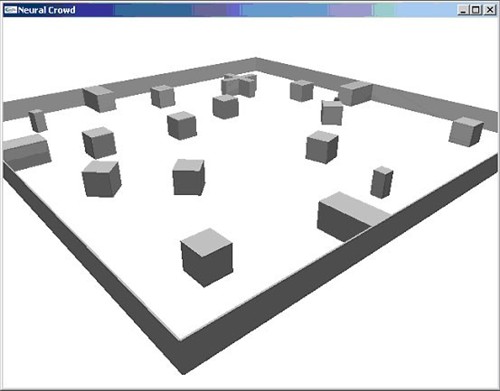

Obstacle avoidance

The first goal was to make sure the neural network could control an agent by analysing the pixel values. A very simple world was therefore created, consisting of four enclosing walls with different-sized obstacles distributed within them. There was neither floor nor ceiling, and the walls and obstacles were all the same dark colour. Figure 5 shows an overview of the world created for this test.

Figure 5: a simple world to test obstacle avoidance.

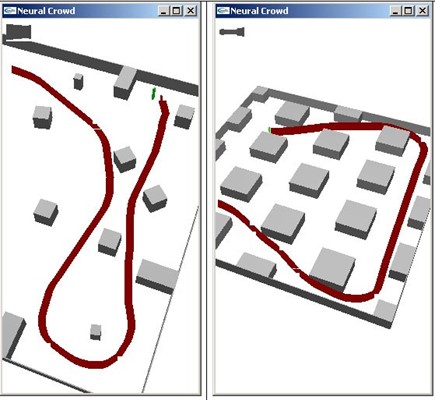

The results from this test were very promising. After only a few minutes of training, the neural network was able to steer the agent around the room, successfully avoiding all obstacles. Furthermore, when the agent was placed in a world with a different layout, it navigated around the obstacles without any further training. Figure 6 shows an example of the path taken by the agent in each of the two worlds.

Figure 6: examples of paths taken by the agent. The red lines show the path taken. The image on the left is the world that the agent was trained in, while the image on the right is a world that the agent had not seen before.

The neural network had learnt to ignore objects that were far away from it or off to its sides. It learnt that when an obstacle was close, it needed to turn left or right, depending on what lay to either side. Because the neural network had no information about the objects other than what it saw, it must have learnt to judge from the image alone whether an object was near or far.
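To make the pixels-to-steering idea concrete, here is a minimal sketch of a forward pass through a small fully connected network. The frame size, the seven-node hidden layer, the tanh activation, the random weights, and the sign convention for the output are all assumptions made for illustration; none of them is taken from the network used in these tests.

```python
import math
import random

def make_layer(n_in, n_out, rng):
    """Return (weights, biases) for one fully connected layer with small random weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(pixels, layers):
    """Propagate greyscale pixel values (0..255) through each layer in turn."""
    activations = [p / 255.0 for p in pixels]  # scale pixels to 0..1
    for weights, biases in layers:
        activations = [
            math.tanh(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations

def steering_command(pixels, layers):
    """Assumed convention: negative output steers left, positive steers right."""
    return forward(pixels, layers)[0]

rng = random.Random(0)
N_PIXELS = 16 * 12  # hypothetical low-resolution frame
layers = [make_layer(N_PIXELS, 7, rng), make_layer(7, 1, rng)]
frame = [rng.randrange(256) for _ in range(N_PIXELS)]
steer = steering_command(frame, layers)
```

With untrained random weights the output is meaningless; training would adjust the weights so that the output matches the steering chosen by the human trainer for each frame.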

This type of obstacle avoidance is fundamentally different from approaches in which the agent has knowledge of the position and size of the objects. In those systems, the path taken by the agent is arrived at by mathematically analysing the situation, which can give optimal solutions when the world follows certain assumptions. However, they are normally rather simplistic, ignoring much of the reasoning that a human would use. For example, if there is a gap between two obstacles, can the agent fit through it? Answering this requires not only calculating the distance between the obstacles but also reasoning about the shape and size of the agent. And what if one of the obstacles were rotated, leaning, or of an irregular shape? Hand-coding a function to take care of all the possible contingencies would be extremely difficult for a number of reasons, not least because we may not be able to identify all the rules we use as humans when performing obstacle avoidance. Training the agent by example therefore seems ideal for obstacle avoidance in an arbitrary environment.
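For contrast, the sketch below shows the kind of hand-coded geometric check that the analytic approach relies on. It assumes circular obstacles and an agent described by a single width, which is exactly the sort of simplifying assumption discussed above; the function names and the `clearance` parameter are hypothetical.

```python
import math

def gap_width(centre_a, radius_a, centre_b, radius_b):
    """Free space between two circular obstacles, given centres (x, y) and radii."""
    centre_distance = math.hypot(centre_b[0] - centre_a[0], centre_b[1] - centre_a[1])
    return centre_distance - radius_a - radius_b

def agent_fits(centre_a, radius_a, centre_b, radius_b, agent_width, clearance=0.0):
    """True if the agent, plus a safety clearance on each side, fits through the gap."""
    return gap_width(centre_a, radius_a, centre_b, radius_b) >= agent_width + 2 * clearance
```

The check is only valid while the circular-obstacle assumption holds. A rotated box, a leaning pole, or an irregular shape would each need its own geometry, which is where the hand-coded approach becomes hard to maintain.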

Road following

The next goal was to test the neural network's ability to follow the road, which was similar to the goal in [1]. The agent was trained to drive down the centre of the road, so it was presumed that the neural network would identify the white lines of the road and steer the agent so that it stayed centred on them. It would need to pass straight through intersections, ignoring the perpendicular road and its white lines. It would also need to turn corners, and if it approached a T-intersection and had to choose between steering left and right, it should turn in whichever direction was easier (e.g. if it was already steering slightly to the left, then it should turn left).

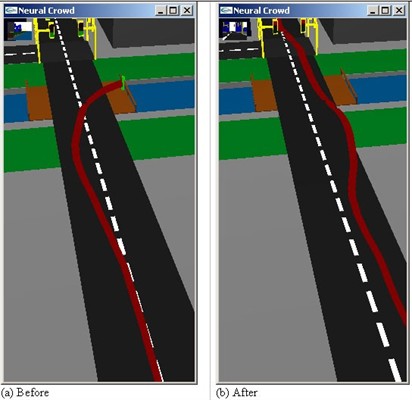

During training, it was important to drive the agent in both directions so that there were plenty of examples of turning both left and right. It was also important to teach the agent how to recover when it was not centred on the road. This was done by temporarily turning off the training, steering the agent away from the middle, turning training back on, and then recovering. Commandeering was also needed to improve the accuracy. Figure 7 shows an example of the agent successfully navigating itself around the roads. Figure 8 shows how the performance was improved using commandeering after it was found that the agent performed poorly around the grassy areas.
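The recovery procedure can be sketched as a recorder that stores training examples only while training is switched on. This is an illustrative sketch, not the project's code: `TrainingRecorder`, `observe`, and `record_recovery` are hypothetical names, and a frame is represented by any object.

```python
from dataclasses import dataclass, field

@dataclass
class TrainingRecorder:
    recording: bool = True
    examples: list = field(default_factory=list)

    def observe(self, frame, human_steering):
        """Store a (frame, steering) pair only while recording is enabled."""
        if self.recording:
            self.examples.append((frame, human_steering))

def record_recovery(recorder, drift_frames, recovery_frames):
    """Drift off-centre with training off, then record only the recovery."""
    recorder.recording = False
    for frame, steering in drift_frames:
        recorder.observe(frame, steering)  # ignored: the drift must not be learnt
    recorder.recording = True
    for frame, steering in recovery_frames:
        recorder.observe(frame, steering)  # kept: shows how to return to the centre
```

Switching recording off during the drift matters because the network imitates whatever it is shown. If the drift were recorded, the agent would also learn to steer away from the centre.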

Figure 7: an example of an agent following the road. The agent was travelling in the counter-clockwise direction, only turning when it needed to.

While the agent performed reasonably well, at times it did deviate from the centre of the road. When driving on a road, it is very important that the agent does not stray off course, as this may lead to an accident. In cases where the agent has a representation of the roads in its knowledge base, it may be safer to use this representation to calculate the agent's position rather than using a neural network. Nevertheless, the research in [1] demonstrates that with sufficient high-quality training, a neural network can learn to steer safely on real-world roads.

Figure 8: an example of using commandeering to improve performance. On the left is the path taken by an agent when approaching the grassy area. The change in colour of the ground surrounding the road initially confused the agent. The image on the right shows the improvement after retraining (although more training is still needed at this stage to keep the agent in the middle of the road), and illustrates the use of commandeering: the point where the agent gets confused can be seen and corrected, rather than trying to guess where the agent may have problems.

Obeying traffic lights

For this step, the neural network was used to control the linear acceleration of the agent, with steering being human-controlled. It was hoped that the agent would learn to stop for red lights and go for green lights. The behaviour for orange lights should depend on the current velocity. Furthermore, traffic lights in the distance should be ignored, and the agent should stop only when it comes within a certain distance of the intersection.
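Written as an explicit rule, the behaviour the network was hoped to imitate might look like the sketch below. The reaction distance, the braking deceleration, and the use of the stopping distance v²/(2a) to decide the orange case are assumptions chosen for illustration; the project trained by example and did not necessarily use any such rule.

```python
def target_action(light, distance, speed, react_distance=30.0, max_brake=4.0):
    """Return "go" or "stop" for a light `distance` metres ahead at `speed` m/s.

    react_distance and max_brake are hypothetical tuning values.
    """
    if distance > react_distance:
        return "go"  # lights far in the distance are ignored
    if light == "green":
        return "go"
    if light == "red":
        return "stop"
    if light == "orange":
        stopping_distance = speed ** 2 / (2 * max_brake)
        # Stop only if the agent can still come to rest before the intersection.
        return "stop" if stopping_distance <= distance else "go"
    raise ValueError(f"unknown light colour: {light!r}")
```

Even in this simplified form the correct output depends on three things at once (colour, distance, and speed), which gives some sense of how much the network had to infer from a small patch of pixels.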

It was found that the agent was not able to learn this behaviour at all. It may be that, once converted to greyscale, the different colours of the lights were too similar to distinguish from one another. In addition, the lights were quite small, and depending on the exact location and orientation of the agent they appeared in different parts of the screen, which would further increase the difficulty of the task.
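The greyscale hypothesis can be checked with a little arithmetic. The sketch below compares two common conversions on idealised light colours. The RGB values are assumptions, and it is not known which conversion the simulation used; the point is only that the choice of conversion can erase the difference between red and green.

```python
def grey_average(rgb):
    """Plain channel average."""
    r, g, b = rgb
    return (r + g + b) / 3

def grey_luma(rgb):
    """Weighted sum using the ITU-R BT.601 luma coefficients."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b

# Idealised, hypothetical light colours.
LIGHTS = {"red": (255, 0, 0), "orange": (255, 165, 0), "green": (0, 255, 0)}

if __name__ == "__main__":
    for name, rgb in LIGHTS.items():
        print(f"{name:6s} average={grey_average(rgb):6.1f} luma={grey_luma(rgb):6.1f}")
```

Under the plain average, pure red and pure green both map to a grey level of 85, so a network that sees only that value cannot tell them apart. The weighted conversion keeps them separate, although rendered lights with shading and glow would be less cleanly separated than these pure colours.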

Path finding

The final goal of the project was to use the neural network for path finding. This involved telling the agent to move to a random destination, and when this destination was reached, a new random destination was given. This is a more difficult task than the others because the agent must head towards a destination while at the same time avoiding obstacles, and these two tasks often conflict with each other. To make it easier, the agents did not need to stay on the road, and they were allowed to walk across the river rather than crossing it by a bridge.

The results were promising but far from perfect. While most of the time the agent was able to avoid obstacles and head in the general direction of the destination, its movements were sometimes erratic, it did not take the shortest path, and it would sometimes head in completely the opposite direction to the one it should have taken. It seemed that the destination acted as an influence on where the agent went, rather than as a goal to be reached.
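One way to put a number on "did not take the shortest path" is to compare the straight-line distance with the distance actually travelled. The metric below is a suggestion rather than something measured in these experiments. It also ignores obstacles, so a score below 1.0 is not necessarily a fault.

```python
import math

def path_length(points):
    """Total length of a path given as a list of (x, y) positions."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def path_efficiency(points):
    """Straight-line distance divided by travelled distance; 1.0 is a direct path."""
    travelled = path_length(points)
    if travelled == 0:
        return 1.0  # the agent never moved, so there is no detour to penalise
    return math.dist(points[0], points[-1]) / travelled
```

A loop just before the destination lowers the score only slightly, whereas heading the wrong way for a long stretch lowers it sharply, so the metric separates the two kinds of error described above.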

Several network topologies were tried, ranging from a single five-node hidden layer up to three hidden layers of eight nodes each. Keeping the network simple proved the best strategy: a single hidden layer with seven nodes gave the best performance, whereas multiple hidden layers often resulted in a degenerate strategy such as "turn left no matter what", causing the agent to go around in circles.
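To compare the topologies mentioned above, it can help to count their trainable parameters. The sketch assumes fully connected layers with one bias per node, and the input and output sizes (100 and 1) are hypothetical, since the actual sizes are not given in this section.

```python
def count_parameters(n_inputs, hidden_layers, n_outputs):
    """Weights plus biases for a fully connected feedforward network."""
    sizes = [n_inputs, *hidden_layers, n_outputs]
    return sum((fan_in + 1) * fan_out for fan_in, fan_out in zip(sizes, sizes[1:]))

if __name__ == "__main__":
    for hidden in ([5], [7], [8, 8, 8]):
        print(hidden, count_parameters(100, hidden, 1))
```

With these assumed sizes the three topologies have 511, 715, and 961 parameters respectively. A larger, deeper network generally needs more training examples to fit well, which may be one reason the multi-layer networks collapsed into a constant output, although this section does not establish the cause.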

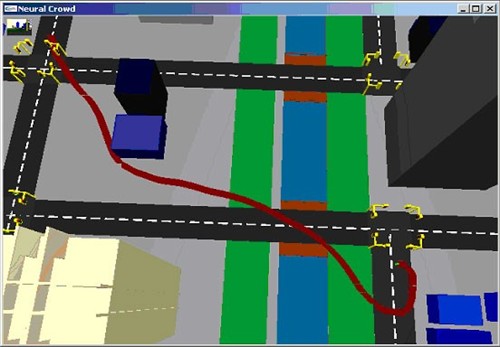

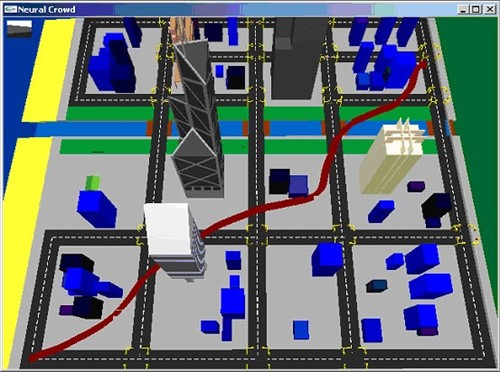

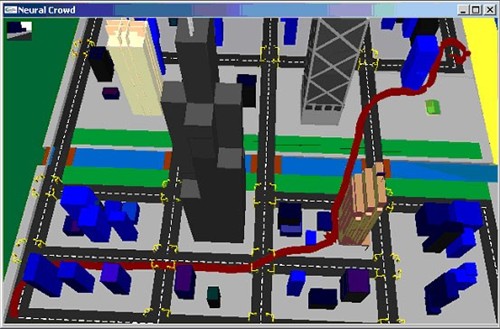

Despite the problems, the fact that the agent normally headed in the correct general direction of the destination showed promise. It seems likely that with better training and more experiments with different network topologies, the agent would be able to find its way around the city successfully. Figure 9 shows some example results from the simulation.

Figure 9 (a): The agent started in the top left of the screen, narrowly avoided some buildings, crossed the bridge, but then had a little difficulty in getting to the correct spot as can be seen by the loop at the end of the path.

Figure 9 (b): Long-distance path finding, with the agent starting at the bottom left-hand corner of the screen. One of the features to note is the smoothness of the path, which, in the bottom left-hand side of the screen for example, resembles the kind of obstacle avoidance while heading to a destination that a human pedestrian may perform. In other situations though, it only serves to increase the distance travelled, as in the second half of the path where it should have just headed straight across the river.

Figure 9 (c): More long-distance path finding, with the agent starting at the bottom left corner. Near the middle of the path, next to the brown building, the agent got stuck and had to be helped out. Once again, at the end of the path the agent had trouble getting to the correct spot, doing a loop before finally arriving at its destination.

This would be worth studying further because, if successful, it may be a good way to move an autonomous agent from A to B. Higher-level planning could determine where the agent should go (or in which direction), and this vision-based neural network navigation system could be used to get it there, with the advantages given earlier, such as the neural network's ability to deal with the noisy data and constantly changing environment inherent in the real world. Obstacle avoidance on its own is less useful. If a different method drove the agent until an obstacle was detected and the neural network then took over to avoid it, the network might leave the agent facing in completely the wrong direction. There are also difficulties in knowing when to turn the obstacle avoidance on and off, and in how to combine it with another method. If the neural network could itself keep the agent heading in the correct direction, it should be able to guide the agent smoothly around obstacles and then smoothly turn it back towards the destination.
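The division of labour proposed here, with a planner choosing destinations and a low-level controller getting the agent there, can be sketched as a simple loop. Everything in the sketch is hypothetical: `straight_line_step` is a stand-in for the neural network controller and simply moves towards the target, and the waypoint list stands in for the output of a higher-level planner.

```python
import math

def straight_line_step(position, target, step=1.0):
    """Stand-in for the neural network: move up to `step` units towards the target."""
    dx, dy = target[0] - position[0], target[1] - position[1]
    distance = math.hypot(dx, dy)
    if distance <= step:
        return target
    return (position[0] + step * dx / distance, position[1] + step * dy / distance)

def follow_waypoints(start, waypoints, controller=straight_line_step,
                     tolerance=0.5, max_steps=1000):
    """Hand each planner waypoint in turn to the low-level controller."""
    position = start
    visited = [start]
    for waypoint in waypoints:
        steps = 0
        while math.dist(position, waypoint) > tolerance and steps < max_steps:
            position = controller(position, waypoint)
            visited.append(position)
            steps += 1
    return visited
```

The planner never needs to know about obstacles between waypoints, and the controller never needs to know the overall route. Replacing the stand-in with a trained network would not change the loop, provided the network can be given the current destination as an input.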
