This section first describes the implementation of the virtual city, covering its graphics and collision detection, and then the implementation of the neural network.

City implementation

The simulation was implemented in C++ using OpenGL and GLUT (the OpenGL Utility Toolkit). ColDet, an open-source library written by Amir Geva, was used for the collision detection.

The objects in the world could be broadly divided into movable objects, such as people and cars, and static objects, such as roads, traffic lights and buildings. Object-oriented programming was used to represent them: the Car and People classes extended the MovableObject class, buildings and other static objects extended the StaticObject class, and both MovableObject and StaticObject extended the WorldObject class. WorldObject contained properties common to all objects, such as the object's position in space, and methods such as a draw method. MovableObject added properties such as angular and linear velocity and a method to move the object at each time step.
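A minimal C++ sketch of this hierarchy follows. Only the class names WorldObject, StaticObject, MovableObject and Car come from the text; the Vec3 type, the scalar speed, the heading angle and the exact form of move() are assumptions made for illustration.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical 3-D vector type (not from the original code).
struct Vec3 {
    double x, y, z;
};

// Properties and behaviour shared by every object in the world.
class WorldObject {
public:
    explicit WorldObject(Vec3 position) : position_(position) {}
    virtual ~WorldObject() = default;

    virtual void process(double /*dt*/) {}  // most static objects do nothing
    virtual void draw() const {}            // would feed the model's triangles to OpenGL

    const Vec3& position() const { return position_; }

protected:
    Vec3 position_;
};

// Roads, traffic lights and buildings would derive from this.
class StaticObject : public WorldObject {
public:
    using WorldObject::WorldObject;
};

class MovableObject : public WorldObject {
public:
    MovableObject(Vec3 position, double speed, double angularVelocity)
        : WorldObject(position), speed_(speed), angularVelocity_(angularVelocity) {}

    // One time step: turn about the Y axis, then move forward along the
    // new heading in the X-Z (ground) plane.
    void move(double dt) {
        heading_ += angularVelocity_ * dt;
        position_.x += speed_ * std::sin(heading_) * dt;
        position_.z += speed_ * std::cos(heading_) * dt;
    }

    void process(double dt) override { move(dt); }

protected:
    double speed_;            // linear speed in m/s (assumed scalar)
    double angularVelocity_;  // rad/s about the Y axis
    double heading_ = 0.0;    // radians; 0 points along +Z (assumed)
};

class Car : public MovableObject {
public:
    using MovableObject::MovableObject;
};
```

A Car created at the origin with a speed of 10 m/s and no angular velocity ends up 5 m along +Z after one half-second step.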

The collision detection library works with triangles, so all objects in the world were represented using triangles. Rather than hard-coding the city into the simulation, a custom text file format was created for loading the map. The following shows an example of a map input file:

Road 96.0 0.05 0.0 0.0 90.0 0.0 0.1 0.1 0.1 200.0 0.0025 8.0

TrafficLights -96.0 0.1 40.0 0.0 90.0 0.0 0.1 0.1 0.1 9.0 5.0 9.0 3 600 300 600 0

Model -10.0 0.0 -25.0 0.0 180.0 0.0 0.0 0.0 0.0 18 100 18 boc.model 0

The first line creates a road. The next three numbers are the X, Y and Z coordinates of the centre of the road, followed by its rotation in degrees about each axis (here it is rotated 90° about the Y axis), its colour (the red, green and blue components, each between zero and one) and finally its size along each axis in metres.
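The twelve numbers shared by every map entry can be read with a string stream, as in the sketch below. The MapEntry struct and parseMapLine function are hypothetical names, and keeping the type-specific trailing fields as an unparsed string is an assumption about how the remaining columns might be handled.

```cpp
#include <array>
#include <cassert>
#include <sstream>
#include <stdexcept>
#include <string>

// Hypothetical record for the fields shared by every map entry.
struct MapEntry {
    std::string keyword;             // "Road", "TrafficLights", "Model", ...
    std::array<double, 3> position;  // centre X, Y, Z (metres)
    std::array<double, 3> rotation;  // degrees about X, Y, Z
    std::array<double, 3> colour;    // red, green, blue in [0, 1]
    std::array<double, 3> size;      // extent along X, Y, Z (metres)
    std::string rest;                // type-specific trailing fields, unparsed
};

// Parse the keyword and the twelve common numbers; anything after them
// (e.g. traffic-light phasing or a model filename) is kept in `rest`.
MapEntry parseMapLine(const std::string& line) {
    std::istringstream in(line);
    MapEntry e;
    in >> e.keyword;
    for (auto* group : {&e.position, &e.rotation, &e.colour, &e.size}) {
        for (double& v : *group) {
            if (!(in >> v)) throw std::runtime_error("malformed map line: " + line);
        }
    }
    std::getline(in >> std::ws, e.rest);  // leaves `rest` empty if nothing follows
    return e;
}
```

For the Model line in the example, `rest` would hold "boc.model 0", which the Model-specific code could then interpret.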

The next line creates a set of traffic lights. It has the same format as the road, but with five extra numbers. The first specifies the number of roads coming into the intersection, which here is three, making it a T-intersection. The remaining four specify the phasing of the lights, i.e. how long each light should stay green. In this case one of the lights stays green for only half as long as the other two (the final zero is unused because only three roads come into this intersection).
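Assuming the lights turn green one road at a time in the order listed, and that the phase durations are measured in simulation time steps (the text does not give the unit), the road whose light is green at a given time can be computed as follows. greenRoad is a hypothetical helper, not part of the original code.

```cpp
#include <cassert>
#include <vector>

// Index of the incoming road whose light is green at time step t.
// Only the first roadCount durations are used; trailing entries such as
// the final 0 in the example are ignored.
int greenRoad(long t, int roadCount, const std::vector<long>& greenTimes) {
    assert(t >= 0);
    assert(roadCount > 0 && roadCount <= static_cast<int>(greenTimes.size()));
    long cycle = 0;
    for (int i = 0; i < roadCount; ++i) cycle += greenTimes[i];
    assert(cycle > 0);

    long phase = t % cycle;  // position within the current cycle
    for (int i = 0; i < roadCount; ++i) {
        if (phase < greenTimes[i]) return i;
        phase -= greenTimes[i];
    }
    return roadCount - 1;  // not reached when cycle > 0
}
```

With the example phasing {600, 300, 600, 0} and three roads, the cycle is 1500 steps long and the second road is green for steps 600 to 899 of each cycle.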

Finally, a building is created. The keyword "Model" specifies that this will be a StaticObject object whose geometry is read from a model file (here boc.model). The final number specifies whether the triangles loaded from that file are also used for collision detection. Here, the '0' means they are not; instead, a simple box occupying the same space as the model is used. A complicated model with hundreds of triangles can therefore be drawn on the screen while only a few triangles are needed for collision detection. This is much more efficient, although collisions are only accurate if the model is roughly box-shaped. The model files use a second custom format. The following shows an example of a model file:

0.5 0.1587 0.5

# the scale factors to multiply it so all the

# numbers are between -0.5 and 0.5

# recommended ratios are 1.0 6.3 1.0

Colour 0.5 0.5 0.5

Box 0.0 0.0 0.0 2.0 1.0 2.0

Rotate 45.0 0.0 0.0

Translate 0.0 1.0 0.0

Triangle -1.0 0.0 -1.0 0.0 5.5 0.0 -1.0 5.0 -1.0

When a model is loaded, it is expected to measure one metre along each axis (so that it can easily be stretched by the size given in the map file), which means all coordinates in the model should lie between -0.5 and 0.5. Working with such numbers can be inconvenient, however, especially for something like a tall building whose height is much greater than its width and depth. The model file may therefore use any numbers, which are transformed on loading so that every coordinate lies between -0.5 and 0.5. The first three numbers of the model file give the scale factor for each axis that achieves this. In this example, the width and depth are specified as numbers between -1 and 1, while the height is between -6.3 and 6.3 (although anything below zero would put the building underground).

The "recommended ratios" comment helps when specifying the size of the building in the map file, so that the building is not stretched or compressed in any direction relative to its model. In this example, the width and depth of the building should be equal, and the height should be 6.3 times the width or depth.

The remaining lines show all the functionality of the model files, which were designed to work in a similar fashion to the way OpenGL receives vertex information. The "Colour" line sets the colour of all subsequent parts of the model; the "Box" line creates a box (really a shortcut for 12 triangles in a box shape) centred at the given model coordinates with the given size; the "Rotate" line rotates all subsequent parts of the model by the given angles around each of the three axes; the "Translate" line translates all subsequent parts of the model by the given amount; and finally the "Triangle" line creates a triangle with the three given vertices.
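The sketch below reads the scale line and the Translate, Box and Triangle commands of a model file, expanding each Box into its 12 triangles. It assumes that translations accumulate (as glTranslate does in OpenGL) and that the scale factors are applied after translation. Colour and Rotate are skipped for brevity, Rotate partly because the order in which the three axis rotations are applied is not stated. loadModel, boxTriangles, Vec3 and Triangle are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

struct Vec3 { double x, y, z; };
using Triangle = std::array<Vec3, 3>;

// Twelve triangles (two per face) for an axis-aligned box with the given
// centre and size, i.e. what a "Box" line stands for.
std::vector<Triangle> boxTriangles(const Vec3& c, const Vec3& size) {
    const Vec3 lo{c.x - size.x / 2, c.y - size.y / 2, c.z - size.z / 2};
    const Vec3 hi{c.x + size.x / 2, c.y + size.y / 2, c.z + size.z / 2};
    // Corner i takes x from bit 0, y from bit 1 and z from bit 2 of i.
    auto corner = [&](int i) {
        return Vec3{(i & 1) ? hi.x : lo.x, (i & 2) ? hi.y : lo.y, (i & 4) ? hi.z : lo.z};
    };
    // The four corners of each face, listed in order around the face.
    const int faces[6][4] = {{0, 1, 3, 2}, {4, 6, 7, 5}, {0, 4, 5, 1},
                             {2, 3, 7, 6}, {0, 2, 6, 4}, {1, 5, 7, 3}};
    std::vector<Triangle> out;
    for (const auto& f : faces) {
        out.push_back({{corner(f[0]), corner(f[1]), corner(f[2])}});
        out.push_back({{corner(f[0]), corner(f[2]), corner(f[3])}});
    }
    return out;
}

// Reads a model file. The first data line holds the per-axis scale factors;
// "Translate" offsets all later geometry (assumed to accumulate); "Box" and
// "Triangle" emit geometry. Colour and Rotate are ignored in this sketch.
std::vector<Triangle> loadModel(std::istream& in) {
    std::vector<Triangle> result;
    Vec3 scale{1.0, 1.0, 1.0}, offset{0.0, 0.0, 0.0};
    bool haveScale = false;
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream ls(line);
        std::string word;
        if (!(ls >> word) || word[0] == '#') continue;  // blank line or comment
        if (!haveScale) {
            std::istringstream nums(line);  // three bare numbers, no keyword
            nums >> scale.x >> scale.y >> scale.z;
            haveScale = true;
        } else if (word == "Translate") {
            Vec3 d{};
            ls >> d.x >> d.y >> d.z;
            offset = {offset.x + d.x, offset.y + d.y, offset.z + d.z};
        } else if (word == "Box" || word == "Triangle") {
            std::vector<Triangle> added;
            if (word == "Box") {
                Vec3 c{}, s{};
                ls >> c.x >> c.y >> c.z >> s.x >> s.y >> s.z;
                added = boxTriangles(c, s);
            } else {
                Triangle t{};
                for (Vec3& v : t) ls >> v.x >> v.y >> v.z;
                added.push_back(t);
            }
            // Translate in model units, then scale into the unit cube.
            for (Triangle& t : added) {
                for (Vec3& v : t) {
                    v = {(v.x + offset.x) * scale.x, (v.y + offset.y) * scale.y,
                         (v.z + offset.z) * scale.z};
                }
                result.push_back(t);
            }
        }
    }
    return result;
}
```

With a scale factor of 0.5 on the X axis, as in the example, a box of width 2 ends up exactly one unit wide. The same boxTriangles helper could also build the simple collision box used in place of a model whose final map flag is 0.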

The simulation is run with the filename of a map file passed in as a command-line argument. The program reads through the map file, building up a vector of WorldObject objects. At each time step during the simulation, every object has its "process" and "draw" methods called. Most static objects do nothing in their "process" method; the exception is the traffic lights, which change the colours of certain triangles in their model when it is time for the lights to change. The "process" method of a movable object updates the object's position and checks for collisions. It also runs the neural network to update the rotational velocity, as explained in the following subsection. The "draw" method simply loops through the model's vector of triangles, feeding the vertices to OpenGL, which takes care of the rest (i.e. projecting the 3-D scene onto the 2-D screen buffer, including clipping and perspective projection).
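Building the world from the map file and running a time step can be sketched as follows. The keyword-to-factory table, the loadMap and step functions, and calling process before draw for each object are assumptions; TrafficLights::process here merely counts steps where the real code would recolour the light triangles.

```cpp
#include <cassert>
#include <functional>
#include <istream>
#include <map>
#include <memory>
#include <sstream>
#include <string>
#include <vector>

class WorldObject {
public:
    virtual ~WorldObject() = default;
    virtual void process() {}     // most static objects do nothing here
    virtual void draw() const {}  // would feed the model's triangles to OpenGL
};

class Road : public WorldObject {};
class Model : public WorldObject {};

class TrafficLights : public WorldObject {
public:
    // The real method would recolour the light triangles on a phase change;
    // here it only counts how many steps have been processed.
    void process() override { ++ticks; }
    long ticks = 0;
};

using World = std::vector<std::unique_ptr<WorldObject>>;
using Factory = std::function<std::unique_ptr<WorldObject>()>;

// Build the world by dispatching on the keyword at the start of each map line.
World loadMap(std::istream& mapFile) {
    const std::map<std::string, Factory> factories = {
        {"Road", [] { return std::make_unique<Road>(); }},
        {"TrafficLights", [] { return std::make_unique<TrafficLights>(); }},
        {"Model", [] { return std::make_unique<Model>(); }},
    };
    World world;
    std::string line;
    while (std::getline(mapFile, line)) {
        std::istringstream fields(line);
        std::string keyword;
        if (!(fields >> keyword)) continue;  // skip blank lines
        auto it = factories.find(keyword);
        if (it != factories.end()) {
            world.push_back(it->second());
            // The remaining fields would be parsed and applied to the object here.
        }
    }
    return world;
}

// One simulation time step: every object is processed and then drawn.
void step(World& world) {
    for (auto& obj : world) {
        obj->process();
        obj->draw();
    }
}
```

Because every object is held through a WorldObject pointer, the loop does not need to know which concrete type it is processing.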

Each agent needs to be able to see the world from its own point of view. Currently, a 32 x 24 pixel sub window is created for the agent (the same aspect ratio as the 640 x 480 pixel window that the simulation uses by default). In each time step, both the main window and the agent's sub window are updated using the same draw method, only from different points of view. The agent then reads the pixel values from its sub window to use as input to the neural network. Using sub windows in this way is quite restrictive, because each agent requires its own sub window. If it turned out that an agent only needed to run its neural network every x frames, this could be improved by having x agents share each sub window, taking turns to render into it. This is still not optimal, however, so a future improvement would be to use off-screen rendering so that no sub windows need to be created.
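Once the agent's view has been read back, it must be turned into numbers the network can use. The sketch below assumes one network input per colour channel, scaled to [0, 1]; the text does not say how pixels were encoded, and pixelsToInputs is a hypothetical helper. In an OpenGL program the byte buffer could be filled with glReadPixels, as shown in the comment.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Agent view size from the text: 32 x 24 pixels.
constexpr int kViewWidth = 32;
constexpr int kViewHeight = 24;

// Convert an RGB byte buffer read back from the agent's sub window into
// network inputs in [0, 1]. In an OpenGL program the buffer could be filled with
//   glReadPixels(0, 0, kViewWidth, kViewHeight, GL_RGB, GL_UNSIGNED_BYTE, pixels.data());
// One input per colour channel is an assumption made for this sketch.
std::vector<float> pixelsToInputs(const std::vector<unsigned char>& pixels) {
    assert(pixels.size() == static_cast<std::size_t>(kViewWidth * kViewHeight * 3));
    std::vector<float> inputs(pixels.size());
    for (std::size_t i = 0; i < pixels.size(); ++i) {
        inputs[i] = pixels[i] / 255.0f;
    }
    return inputs;
}
```

Under this encoding a 32 x 24 view gives 2304 network inputs; converting to greyscale instead would reduce that to 768.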

It is important to remember that, because the agent is controlled purely from pixel values, the choice of language and graphics API is not critical: any would be suitable, provided the scene can be rendered in perspective from the agent's point of view. Likewise, the internal representation of objects in the world is not important.

Neural network implementation

A neural network library named FANN ("Fast Artificial Neural Network"), written by Steffen Nissen and Evan Nemerson, was used in this project. The library provides a neural network structure, and each movable object holds one as a property. The two most important methods are "train" and "run".

Training

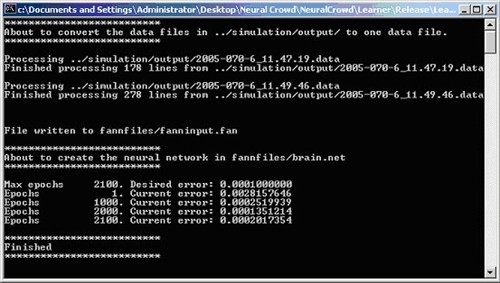

The back-propagation stage, where the neural network is trained, takes place in a separate program called the "Learner". FANN expects all of the training data to be in one large text file: after a header line giving the number of examples, inputs and outputs, each example is a space-separated line of numerical input values followed by a line holding the expected output. The first step of the Learner is therefore to combine all the training data files created by the simulation into one large file in this format. The next step is to call the "train" method in FANN.
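The combining step might look like the following sketch. It assumes each file written by the simulation holds only alternating input and output lines with no header, and writes the header line that FANN's training-file format expects (number of examples, inputs per example, outputs per example). combineTrainingData is a hypothetical name.

```cpp
#include <cassert>
#include <istream>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// Concatenate several training files, each assumed to hold only alternating
// input/output lines, into one FANN-style training file whose first line is
// "<number of examples> <inputs per example> <outputs per example>".
void combineTrainingData(const std::vector<std::istream*>& sources,
                         unsigned numInputs, unsigned numOutputs,
                         std::ostream& out) {
    std::vector<std::string> lines;
    std::string line;
    for (std::istream* src : sources) {
        while (std::getline(*src, line)) {
            // Skip blank lines so they do not break the input/output pairing.
            if (line.find_first_not_of(" \t\r") != std::string::npos) lines.push_back(line);
        }
    }
    assert(lines.size() % 2 == 0);  // every input line needs an output line
    out << lines.size() / 2 << ' ' << numInputs << ' ' << numOutputs << '\n';
    for (const std::string& l : lines) out << l << '\n';
}
```

In a program like the Learner, the sources would typically be std::ifstream objects opened on the simulation's output files, and `out` an std::ofstream for the combined file.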

The "train" method takes the filename of the newly created file containing all the training data, along with the requested network structure (i.e. the number of layers and the number of nodes in each layer), the learning rate, the maximum number of training epochs (passes over the data), and the desired error level. It then trains the network, writing regular progress reports to standard output. Upon completion, it writes a ".net" file to disk holding the trained network so that it can be loaded later.
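The roles of the maximum number of epochs and the desired error can be illustrated with the following stopping rule. This is a sketch of the general idea, not FANN's implementation: trainEpoch is a hypothetical stand-in for one pass of back-propagation over the training data that returns the resulting error, and trainUntil is likewise a hypothetical name.

```cpp
#include <cassert>
#include <functional>
#include <iostream>

// Run training epochs until the error reaches the desired level or the
// epoch limit is hit, printing a progress report every `reportEvery`
// epochs (0 disables reports). Returns the number of epochs run.
unsigned trainUntil(const std::function<double()>& trainEpoch,
                    unsigned maxEpochs, unsigned reportEvery,
                    double desiredError, std::ostream& out = std::cout) {
    for (unsigned epoch = 1; epoch <= maxEpochs; ++epoch) {
        const double error = trainEpoch();
        if (reportEvery != 0 && (epoch == 1 || epoch % reportEvery == 0)) {
            out << "epoch " << epoch << ": error " << error << '\n';
        }
        if (error <= desiredError) return epoch;  // target reached early
    }
    return maxEpochs;  // stopped by the epoch limit
}
```

If the error halves every epoch from 1.0, a desired error of 0.01 is reached after 7 epochs, well inside a limit of 100; a network whose error never falls stops at the limit instead.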

Implementing this part of the project was therefore a very simple task. A command-line program was written in C++ that simply combined all the separate training files into one file and then called the "train" method. Network specifications such as the number of layers and nodes were hard-coded into the program, so changing them required recompiling it. Figure 4 shows an example of the Learner program training a network and writing it to disk.

Figure 4: Example output of the Learner neural-network training program. In this example two training files are combined to train the network for up to 2100 epochs.