Design

This section gives a high-level overview of the system. It first describes the virtual city and then explains the training process, including the design features added to improve the performance of the neural network.

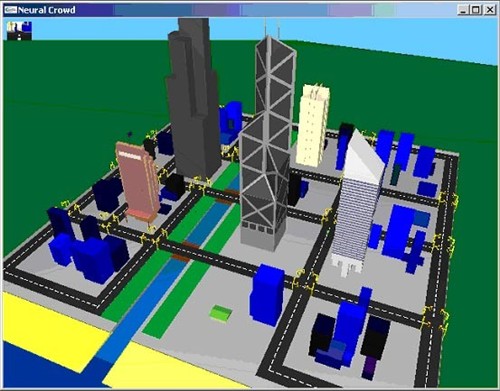

Overview of the city

The simulation takes place in a virtual city; figure 1 shows an overview of the city. The city was designed to be simple, yet at the same time provide a variety of situations for the agent.


Figure 1: an overview of the city


In terms of simplicity, all the places where the agent can walk lie in the same plane, so there are no hills, steps or slopes. Although this made the city easier to program, the simplification was chosen mainly to make it easier for the agent to learn. For example, a staircase or a hill in front of the agent may look the same as a wall or an obstacle, so it would take much longer for the agent to learn to tell them apart. Removing this simplification is left as future work.

Another immediately apparent simplification is the level of graphical detail. For example, many of the buildings are simply blue boxes and there are no complicated objects such as trees. Again, this was done to keep the city simple and, as explained below, the agent's view is of very low resolution, so small details would not be seen by the agent anyway. Of course, if this technology were to be used in a real-life situation, the simulation should be as lifelike as possible. For the purposes of this project, however, the simple graphics were satisfactory.

What was important, though, was the use of perspective, so that the agent can learn to distinguish between objects close to it and objects far away from it. Basic lighting (ambient and diffuse, to be specific) was also used, as this gives further clues to the distances of objects. These considerations matter because, as we have all experienced, a large object far away can cast a similar pattern on the retina as a small object close by, yet we are still able to distinguish between the two thanks to cues such as perspective.

It is important to note that the agent only knew what it saw. In other words, it had no information about the city: no map, no knowledge of which objects existed where, and no knowledge of how far apart the objects were. This is because the aim of this project was to investigate how an agent could find its way around an area where none of this information was available, as would be the case for a robot placed in a previously unseen area in the real world.

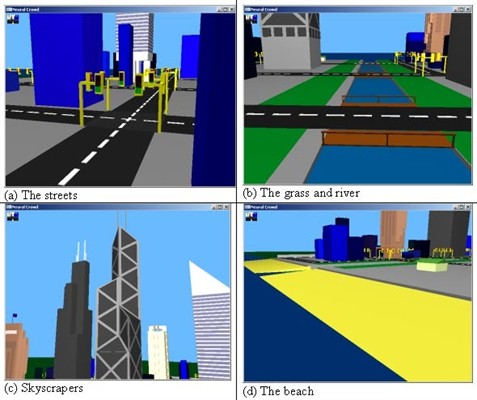

Figure 2 shows several features of the city and why those features were chosen.


Figure 2: a selection of features from the city. In (a), a typical street scene is shown. The roads are dark-coloured and include fixed-size white road markings, which help the agent to identify where the roads are and to follow them. There are also working traffic lights that agents should obey. Finally, the footpaths surrounding the road are light grey. In (b), the grassy area and river are shown, along with the bridges. These exist solely to give more variety to the city so that, for example, an agent will learn to follow the road regardless of what surrounds it. In (c), the skyscrapers are shown. These are not only for show: their size further challenges the neural network, because a distant skyscraper may appear the same size and shape as a small, close object, so the network must learn to distinguish between the two. Finally, (d) shows the waterfront, which again exists to provide more variety in the world.


Training

Training a neural network is composed of two parts: gathering training data, and back-propagation, which uses the gathered training data to modify the weights of the neural network.

Gathering Training Data

Gathering training data was simply a matter of controlling the agent with the cursor keys. If the goal was to keep the agent in the centre of the road, the human trainer would steer the agent around the city, ensuring that it stayed in the middle of the road. Every 10 frames a screenshot was taken from the agent's point of view and saved, along with the steering amount and some other information, to a text file, creating one training instance.
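The recording step might be sketched as follows. This is only an illustration: the report does not give the layout of the text file, so the one-line-per-instance format and the names `format_instance` and `record_run` are assumptions.

```python
import io

RECORD_EVERY = 10  # the text states that every 10th frame was recorded


def format_instance(pixels, extras, steering):
    """Serialise one training instance as one line of text (hypothetical layout)."""
    values = list(pixels) + list(extras) + [steering]
    return " ".join(f"{value:.4f}" for value in values)


def record_run(frames, out):
    """Write every RECORD_EVERY-th frame to `out` and return the number written.

    `frames` yields (pixels, extras, steering) tuples, one per rendered frame.
    """
    written = 0
    for index, (pixels, extras, steering) in enumerate(frames):
        if index % RECORD_EVERY == 0:
            out.write(format_instance(pixels, extras, steering) + "\n")
            written += 1
    return written


# Usage: 35 identical dummy frames produce 4 instances (frames 0, 10, 20, 30).
demo_frames = [([0.0, 1.0], [0.25], 0.5)] * 35
demo_out = io.StringIO()
demo_count = record_run(demo_frames, demo_out)
```

Writing to any file-like object keeps the sketch independent of how the training files are named or stored.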

When gathering the training data, there were several important points:

- The screenshot taken was very small compared to what would be shown on a computer screen. If the simulation was run at 640 x 480 pixels, there would be a total of 307,200 pixels to input into the neural network, which would be slow and memory intensive. This level of detail is not needed to control the agent; around 750 pixels was sufficient.

- Even though the agent's view of the world was much smaller than the trainer's, it was important that the aspect ratio (i.e. the width to height ratio) was maintained. Otherwise the human trainer might see and react to an obstacle near the side of the screen that the agent could not see. From the agent's point of view the trainer would be reacting to something that was not there, and so the agent would learn to perform evasive actions even when nothing was there.

- The agent also sees the world in greyscale. The red, green, and blue pixel values were converted into one greyscale value between zero and one. Due to the three-dimensional nature of colour (i.e. hue, luminance and saturation), allowing the agent to see in colour would mean each pixel would require three values, and hence the already large input size of the neural network would be three times larger. For a task such as obstacle avoidance or path finding, the actual colour of objects is not so important as seeing that they are there. After all, we can watch black-and-white footage of street scenes and know where the road is, where buildings are etc, so this simplification was seen to be perfectly acceptable. When talking about the "blue river" or the "green grass", even though the agent will see them both as grey, they will almost always have different intensities and so the agent will be able to distinguish between them.

- A common problem with this type of training is that, because the human trainer is able to stay on the road, almost all the training instances will show the agent in the middle of the road, where it should be. However, when the agent is using the network to decide how to drive, it is likely that it will sometimes end up on one side of the road, off the road completely, or heading directly towards an obstacle, none of which is covered in the training examples. The trainer cannot simply steer the agent off the road in order to show it how to recover, because the agent would then learn to swerve off the road for no apparent reason. The solution was to allow the trainer to turn the recording of training data on and off. When driving along the road, the trainer could stop recording, move the vehicle to the side of the road, start recording again and immediately correct the agent's position. This allows the agent to learn what to do in such situations without teaching it to get into them.

- Above it was stated that only every 10th frame was recorded. This was simply done to reduce the number of very similar training instances in the training set. Obviously from one frame to the next, neither the scene nor the steering will have changed much so not only is it unnecessary to record every single frame, but it would slow down the back propagation stage of training.

- Controlling the agent in the simulation is much like controlling a character in a first person shooter game. However, in most games of that type, when either the left or right arrow key is held down, the character turns at a constant velocity until the key is released, when the character immediately stops turning. While this is easy to control, if used as training data there would be only three values: a value for left, a value for no turning, and a value for right. The network would not be able to easily figure out when to turn gently (e.g. when there is an obstacle in the distance) and when to make a hard turn (e.g. when the obstacle is right in front of the agent). Therefore the agent had angular velocity as one of its properties, and pressing the left or right buttons would decrease or increase this respectively. The human trainer could then make long, gentle turns, or sudden sharp turns as required. Using an analogue input device such as a steering wheel would remove this requirement.

- A neural network of this type cannot remember what the agent has seen; it suffers from short-term memory loss. The problem is not simply that the network cannot remember where it has been before: it cannot even remember what happened a fraction of a second earlier. If the agent was heading straight for a wall, it might decide to turn left, but one frame later the inputs might have changed slightly such that it now decides to turn right. This was a common problem in this simulation, where the agent, approaching a wall straight on, would swerve to the left, then to the right, then to the left and so on until it hit the wall. This was combated by inputting the previous steering value into the neural network, which allows the agent to "remember" what it was just doing. In the example above, if it decided to turn left, then in the next frame the fact that it was previously turning left would help to persuade the agent to continue turning left. The forward velocity was also input, in case this had an influence on the steering.

- When training the agent for path finding, a random destination is calculated, and the goal is to reach that destination while avoiding obstacles. When the destination is reached, a new random one is calculated. The destination was input into the network as the angle between the vector pointing in the direction in which the agent is currently moving and the vector from the agent's current position to the destination. Because the agents and the destinations were at the same height, this angle represented the turn needed in order to move directly towards the destination. The usual way of calculating the angle between two vectors is to take the arccosine of the dot product of the two normalised vectors. However, this formula alone does not show whether the current movement vector is to the left or to the right of the destination. To determine this, a "left" vector was defined as the destination vector rotated ninety degrees counter-clockwise around the Y-axis, and the angle between the movement vector and the left vector was calculated. If this angle was less than 90°, the current movement vector was to the left of the destination vector. In addition, the distance to the destination was also used as input, as the distance may affect the behaviour of the agent when path finding.

- As in any programming paradigm, “garbage in, garbage out”. In other words, it was very important that the human trainer performed the path-finding job well. Rather than giving the trainer the numerical values of the angle and the distance to the destination, a large arrow was displayed at the top of the screen pointing to the destination, and the destination itself was highlighted with a bright red cylinder that extended into the sky. With these two additions, it was always very clear where the next destination was, and hence good training examples could be made. It is important to note, though, that these visual features were not made available to the neural network.

- All inputs were normalised to a number between zero and one before being used as input to the neural network, which meant that minimum and maximum values had to be defined for each input. For the pixel data, this was easy: zero was black, one was white, and everything in between was grey. Minimum and maximum rotational and linear velocities were defined so that, for example, zero was hard left and one was hard right. The angle to the destination had minimum and maximum values of negative and positive 180 degrees respectively. The maximum value for the distance to the destination is less obvious, so a cap of 300 metres was used, with any distance above that set to one (the city had dimensions of 400 x 400 metres). Normalising all values eliminates any bias about which inputs are more important than others, leaving the neural network to find for itself which inputs are the most important.

- While neural networks can tolerate some noisy data, it is of course beneficial to minimise the amount of noisy training data. For this reason, each time training started a new file was created using the current date and time as the filename. During training, the training examples were written to this file, and over several training runs several files would be created. This meant that if the human trainer made a mistake, the mistake could be removed from the training examples by deleting the most recently created training file, rather than having to delete all the training examples.

- The background colour was set to light blue to look like the sky for the human, and pure white for the agent in order to help it to better distinguish between the objects in the background.
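The signed angle to the destination and the input normalisation described in the list above could be sketched as below. The code works on (x, z) pairs in the ground plane and assumes a right-handed coordinate system with Y pointing up and the agent's forward direction along negative Z. It also assumes that a positive angle means the destination lies to the right of the heading. None of these conventions is stated in the report, and the function names are illustrative only.

```python
import math

MAX_DISTANCE = 300.0  # metres; the cap stated in the text


def normalise(value, lo, hi):
    """Map value from [lo, hi] to [0, 1], clamping anything outside the range."""
    clamped = max(lo, min(hi, value))
    return (clamped - lo) / (hi - lo)


def signed_angle_to_destination(heading, position, destination):
    """Angle in degrees, in [-180, 180], from the heading to the destination.

    Positive means the destination is to the right of the heading
    (a sign convention assumed for this sketch).
    """
    dx, dz = destination[0] - position[0], destination[1] - position[1]
    hx, hz = heading
    norm = math.hypot(dx, dz) * math.hypot(hx, hz)
    if norm == 0.0:
        return 0.0  # already at the destination, or not moving
    # Unsigned angle: arccosine of the dot product of the normalised vectors.
    cos_angle = max(-1.0, min(1.0, (hx * dx + hz * dz) / norm))
    angle = math.degrees(math.acos(cos_angle))
    # "Left" vector: the destination vector rotated 90 degrees
    # counter-clockwise about the Y-axis (assumed right-handed, Y up).
    left_x, left_z = dz, -dx
    # Angle to the left vector below 90 degrees <=> positive dot product.
    heading_is_left = (hx * left_x + hz * left_z) > 0.0
    return angle if heading_is_left else -angle


def destination_inputs(heading, position, destination):
    """Return the (angle, distance) pair normalised to [0, 1]."""
    angle = signed_angle_to_destination(heading, position, destination)
    distance = math.hypot(destination[0] - position[0],
                          destination[1] - position[1])
    return (normalise(angle, -180.0, 180.0),
            normalise(distance, 0.0, MAX_DISTANCE))
```

With these conventions, a destination directly to the agent's right gives an angle of about +90 degrees, which normalises to roughly 0.75, and any destination more than 300 metres away gives a distance input of one.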

Figure 3 shows an example of what the human trainer sees compared to what the neural network "sees".


Figure 3: an example of what the human trainer sees (a) compared to what the neural network "sees" (b). The human trainer's window includes an arrow at the top of the screen pointing to the destination, a large red marker showing the destination in the scene (in this case on the left), a visual representation of the current steering value at the bottom of the screen, a red square at the bottom left of the screen indicating that training is in progress, and a small window in the top left-hand corner of the screen showing what the agent is seeing. The view the agent gets is greyscale and of a much lower resolution than the trainer's window. It also lacks the auxiliary graphical components described above; however, the neural network is given the numerical values of the angle and distance to the destination along with the linear and angular velocities.
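As a rough illustration of how the network's inputs might be assembled, the sketch below picks a low-resolution view size that keeps the trainer's aspect ratio, converts RGB pixels to greyscale, and appends the numerical inputs. The report does not say how the greyscale value was computed or in what order the inputs were arranged, so the simple average of the three channels, the input ordering and all function names are assumptions.

```python
import math


def agent_view_size(width, height, target_pixels=750):
    """Pick a small view with about target_pixels pixels and the same aspect ratio."""
    scale = math.sqrt(target_pixels / (width * height))
    return max(1, round(width * scale)), max(1, round(height * scale))


def to_grey(rgb):
    """Average 8-bit R, G, B into one intensity in [0, 1] (averaging is an assumption)."""
    r, g, b = rgb
    return (r + g + b) / (3 * 255)


def build_input_vector(rgb_pixels, prev_steering, forward_velocity, angle, distance):
    """Greyscale pixels followed by the four numerical inputs (hypothetical order).

    The four numerical inputs are expected to be normalised to [0, 1] already.
    """
    inputs = [to_grey(pixel) for pixel in rgb_pixels]
    inputs.extend([prev_steering, forward_velocity, angle, distance])
    return inputs
```

For a 640 x 480 trainer window, `agent_view_size` gives 32 x 24, which is 768 pixels at the same 4:3 ratio and is consistent with the "around 750 pixels" mentioned earlier; the size actually used is not stated.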


Back-propagation

The back-propagation stage is where the neural network gets trained. While the purpose of this report is not to explain the theory behind neural networks in any detail, a brief explanation of what happens during back-propagation is given here. Each input of the neural network is linked to all of the hidden nodes in the network, which in turn are all linked to the output node. Each of these links has a weight. When inputs are fed into the network, they are multiplied by the weights and the results become the inputs for the next layer, until the output layer is reached, whose value is the network's estimated answer.
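A minimal sketch of this forward pass, for one hidden layer and a single output node, is given below. The sigmoid activation is an assumption, since the report does not name the activation function, and bias terms are omitted for brevity.

```python
import math


def sigmoid(x):
    """Squash a weighted sum into the range (0, 1); the choice of sigmoid is assumed."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs, w_hidden, w_output):
    """Return (hidden activations, output) for a one-hidden-layer network.

    w_hidden[j][i] is the weight on the link from input i to hidden node j;
    w_output[j] is the weight on the link from hidden node j to the output node.
    """
    hidden = [sigmoid(sum(w * x for w, x in zip(row, inputs)))
              for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_output, hidden)))
    return hidden, output
```

With a sigmoid output the estimate always lies between zero and one, which matches the normalised steering range used for the training data.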

The training of the neural network is therefore the job of finding link weights that give good results, which is done using the training examples provided. The weights are first given random values, and then a loop is run, with each iteration using one of the training examples. For each training example, the inputs are fed into the neural network and the network's output is calculated. This calculated value is compared to the actual value supplied in the training example, and the difference between the two gives information about how the weights need to be changed. For example, if the calculated value is too large, the weights on links that pushed the output up need to be lowered. The error is shared out among the nodes of the previous layer in proportion to the weights of their links, so the links with the largest weights are treated as the most responsible for the error. Each node in the previous layer is thus given an estimate of what its value should have been; these estimates are compared to the calculated values, so that the process propagates back through the network towards the inputs. The loop continues until either the error of the network is small enough or a predefined number of iterations have run.
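The training loop described above can be sketched as plain stochastic gradient descent on a one-hidden-layer network. This is a generic textbook formulation and not necessarily the project's implementation: the sigmoid activation, learning rate, weight initialisation range, epoch-based stopping check and all names are assumptions.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs, w_hidden, w_output):
    hidden = [sigmoid(sum(w * x for w, x in zip(row, inputs)))
              for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_output, hidden)))
    return hidden, output


def mean_squared_error(examples, w_hidden, w_output):
    errors = [(target - forward(inputs, w_hidden, w_output)[1]) ** 2
              for inputs, target in examples]
    return sum(errors) / len(errors)


def train(examples, n_hidden=3, rate=0.5, max_epochs=2000,
          target_error=1e-4, seed=0):
    """Train on (inputs, target) pairs and return (w_hidden, w_output)."""
    rng = random.Random(seed)
    n_inputs = len(examples[0][0])
    # Start from small random weights.
    w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
                for _ in range(n_hidden)]
    w_output = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    for _ in range(max_epochs):
        for inputs, target in examples:
            hidden, output = forward(inputs, w_hidden, w_output)
            # Error signal at the output node.
            delta_out = (target - output) * output * (1.0 - output)
            # Share of the error passed back to each hidden node,
            # in proportion to the weight of its link to the output.
            delta_hidden = [delta_out * w_output[j] * hidden[j] * (1.0 - hidden[j])
                            for j in range(n_hidden)]
            for j in range(n_hidden):
                w_output[j] += rate * delta_out * hidden[j]
                for i in range(n_inputs):
                    w_hidden[j][i] += rate * delta_hidden[j] * inputs[i]
        # Stop early once the error over the whole set is small enough.
        if mean_squared_error(examples, w_hidden, w_output) < target_error:
            break
    return w_hidden, w_output
```

Because no bias terms are included, a constant input of 1.0 can be appended to each example if an offset is needed.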

Upon completion of back-propagation, the network is written to a text file, which can be loaded later when running the simulation.

Commandeering

When providing training data for a neural network there are several aspects to consider. One is the amount of training data to provide, which in most cases is unknown until training has been completed and the network can be tested. Another is the need to provide a distribution of training examples that is not biased and that approximates what the neural network will see when used with new data. For example, if the training data contained significantly more left turns than right turns, then on new data the neural network would be more inclined to turn left even when it should turn right. Without a variety of data the neural network will not learn to generalise or to observe the features of its inputs that are important, or more likely will observe features that are not important.

For example, in this simulation an agent was taught to follow the centre of the road, only turning when it was approaching a wall, but was taught only in the areas of the virtual city where the road was surrounded by the grey footpaths. The agent performed well in areas of the city that it had not seen before as long as the footpaths remained. However, when the road went through the section of the city surrounded by green grass and next to the river, the agent got confused, turning wildly to the left and then to the right. While the best strategy for the agent would have been to concentrate on the white lines in the middle of the road, it was clearly also taking into account the areas around the road. In this example it is quite obvious that a greater variety of areas should have been covered during training. It is not always so obvious, however. When avoiding obstacles, for example, some combinations of obstacles may confuse the network without it being clear to the human trainer what those combinations would be.

For these reasons, the idea of “commandeering” was used. After initial training of the neural network, the agent would then be allowed to run in the environment, using its neural network to decide how to react. If the training data was biased to always turn to the left for example, the agent may start to turn left when it should really turn right. At this point, the human trainer can “commandeer” the agent, turning it to the right, and then relinquishing control to the neural network once again. During the time the trainer was controlling the agent, the agent was recording what was happening, adding this new training data to its training set. In this way, the training set becomes less biased, and any unforeseen difficulties or situations that the agent encounters will become covered in the training set.
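The control loop behind commandeering could look something like the sketch below. It assumes a hypothetical `human_steer` callback that returns a steering value while the trainer is holding the controls and `None` otherwise; the function and parameter names are illustrative and not taken from the project.

```python
def run_with_commandeering(frames, network_steer, human_steer, training_set):
    """Drive through `frames`, letting the trainer override the network.

    frames:        iterable of input vectors, one per frame
    network_steer: function mapping an input vector to a steering value
    human_steer:   function returning a steering value, or None when the
                   trainer is not intervening (hypothetical interface)
    training_set:  list that receives (inputs, steering) pairs recorded
                   while the trainer is in control
    Returns the list of steering values actually applied.
    """
    applied = []
    for inputs in frames:
        override = human_steer(inputs)
        if override is not None:
            # Trainer has commandeered the agent: obey and record the example.
            training_set.append((inputs, override))
            steering = override
        else:
            # Otherwise the neural network stays in control; nothing is recorded.
            steering = network_steer(inputs)
        applied.append(steering)
    return applied
```

Only the frames in which the trainer intervened are added to the training set, so the new examples concentrate on exactly the situations the network handled badly.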

An old saying has it that when a pole is leaning too far to one side, it will lean the same amount in the opposite direction after being "fixed". There is certainly a danger that a biased neural network will become biased in the opposite direction if too much commandeering is performed. The human trainer is relied upon to use their judgment to know when to stop training. If, after some commandeering, the network is retrained with the new data and the newly created network is used for subsequent training, the risk of this happening is greatly diminished. Other methods do exist, such as that used in [1], which kept a training set of bounded size. In that case, when a new example was added, the example removed was the one whose removal brought the average steering direction of all the examples closest to straight ahead, which ensured that the neural network was never biased to turn in one direction. No such methods are used in this project.
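One possible reading of the bounded training set idea is sketched below. It is an interpretation of the one-sentence description above rather than the procedure from [1] itself. It assumes steering values are normalised so that 0.5 means straight ahead, and that only previously stored examples are candidates for removal.

```python
def replace_example(training_set, new_example, straight=0.5):
    """Add new_example and drop one old example, keeping the set size fixed.

    training_set: non-empty list of (inputs, steering) pairs
    The old example removed is the one whose removal leaves the mean
    steering value closest to `straight` (assumed to be 0.5).
    """
    total = sum(steering for _, steering in training_set) + new_example[1]
    size_after = len(training_set)  # one removed, one added

    def distance_from_straight(index):
        mean_after = (total - training_set[index][1]) / size_after
        return abs(mean_after - straight)

    drop = min(range(len(training_set)), key=distance_from_straight)
    return training_set[:drop] + training_set[drop + 1:] + [new_example]
```

For example, adding a hard-left example to a set that already leans left would evict another left-leaning example, pulling the average back towards straight ahead.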
